Tolk: AI Headset

A wearable device as a stepping stone to a post-smartphone world, powered by AI, customised by modularity.

This project was a way for me to explore AI both as a consumer technology and as part of the design process.

Personal project (with my buddy David)

Location: Gothenburg

Year: 2025

Role: The lot

Smartphone Addiction

The smartphone has been a feature of modern life for 15 years or more, but its form factor has barely changed, and the social cost of being absorbed into a screen is widely felt.

Tolk imagines transitional hardware that bridges the gap between the smartphone and a more customised, connected and human device.

A few devices have attempted to break the mould. Google Glass tried a heads-up display (HUD), but people found it intrusive and too geek-chic.

The Rabbit R1 tried to push Large Action Models, but ended up being just a more awkward-to-use smartphone.

The Humane Ai Pin had immense ambitions, with AI and an integrated projector, but the projector couldn't cope with outdoor light.

Valiant Failures

User Challenges

What are the pain points for smart technology? Using informal focus groups with friends and desk research, the issues were boiled down to three key challenges.

Privacy

People find AI tech creepy.

Google Glass failed largely because it felt like an invasion of privacy. How can we reassure users, and the people around them, that they aren't being spied on?

Subtle Use

People find wake words awkward.

Siri and other voice assistants are awkward to use in public. What can be done voice-free, and what are the alternatives to speaking aloud?

Connection

People find technology isolating.

Smartphones and earbuds are physical barriers to the outside world. How can we stay connected to both the digital and the real world?

Introducing Tolk

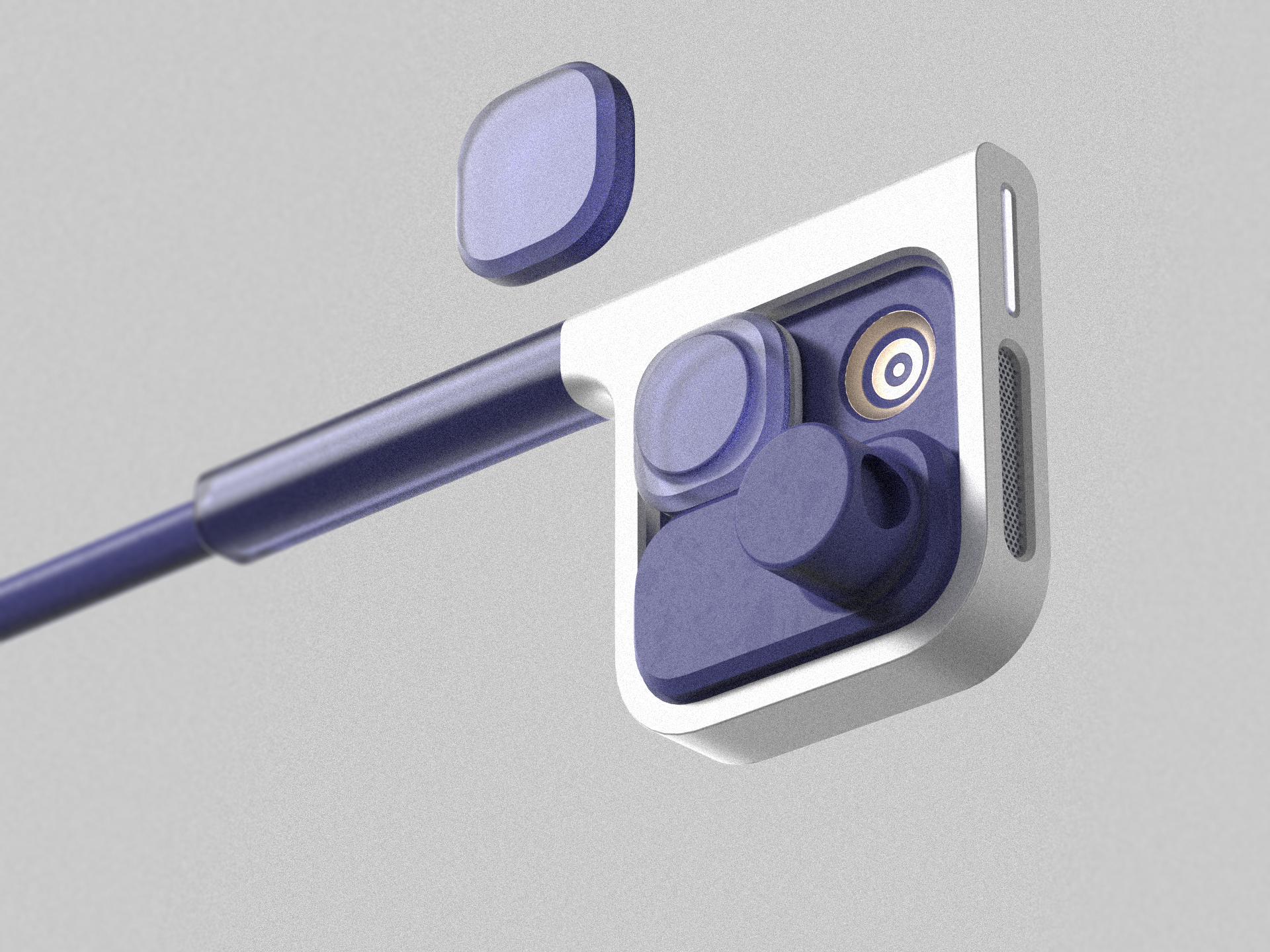

Tolk is a modular and connected headset, with an open-ear design and a focus on privacy.

Connection

Tolk uses an open-ear audio interface, meaning that no senses are obscured: no muffling earbuds or headache-inducing displays.

Privacy

Action buttons allow the microphones to be off by default, with clear LED indicators that tell those around you when they are active.

The optional camera module is blocked by default: an LCD privacy film optically obscures the lens until the user activates it.

Subtle Use

Who Says “Hey Siri” in Public?

Key actions are mapped to action buttons, so features like live translation, Visual Intelligence or custom routines can be started without a word spoken.

Modules can be customised to each user's taste and preferences, which opens the door to a modular ecosystem for future development.

Use Cases

Visual Intelligence

(Camera activated, cross-referencing location with image)

〰️

Ah! This painting is a rare example of a Norman Rockwell still life...

〰️

The optional camera module gives users access to Visual Intelligence on demand. This gives the wearer an extra guide to the world, but only when they want it.

Custom Actions

(Home Time routine activated)

〰️

Cecelia knows you're on your way, and I've queued up the next episode of The Rest is...

〰️

Programmable action buttons let the wearer trigger routines at the click of a button. Routines can be suggested by Tolk once it has learned the user's habits, or customised by the user via voice or smartphone input.

Live Translation

(language detected: Italian)

〰️

"This deal could open up a big opportunity for us..."

〰️

The basic functionality delivers live translation straight to your ear, with translated replies played from the front-facing speakers. Text translation can also be accessed via the optional camera module.

Process and Reflections

This project was an opportunity for me to experiment with AI visualisation tools to see where they are most useful. Here are some of my reflections on the design process.

ChatGPT was good for basic image generation: my "studio shot" model was entirely AI-made and easy to iterate on (e.g. turning a side profile into a front view). But the cracks showed when adding the product. The headset sits over the ear, and ChatGPT consistently misread it, at one point turning it into a pair of glasses.

I fell back on a more traditional workflow: using the AI-generated model as a backplate in KeyShot, rendering the product under roughly matched lighting, then finishing in Photoshop or Procreate. Old-school methods still give far more control and consistency.

Adding a pair of well-documented headphones (Shokz bone-conduction headphones) goes pretty smoothly.

When prompted with a Tolk image, there was far less control over the result.

I had higher hopes for Vizcom, especially for CMF (colour, material, finish) and form exploration. Even with a LoRA trained on a moodboard, it struggled to parse the design language and form factor I was aiming for. Its real strength was image-to-video: I used it heavily for people shots. Prompting was crucial, and even then subtle motions like hand movements were often clumsy. Still, video helped disguise rougher Photoshop work, since motion naturally blends the seams.

Attempt 1: A man conjures a VR headset from the Ether.

Attempt 2: A man gets a migraine and an existential crisis.

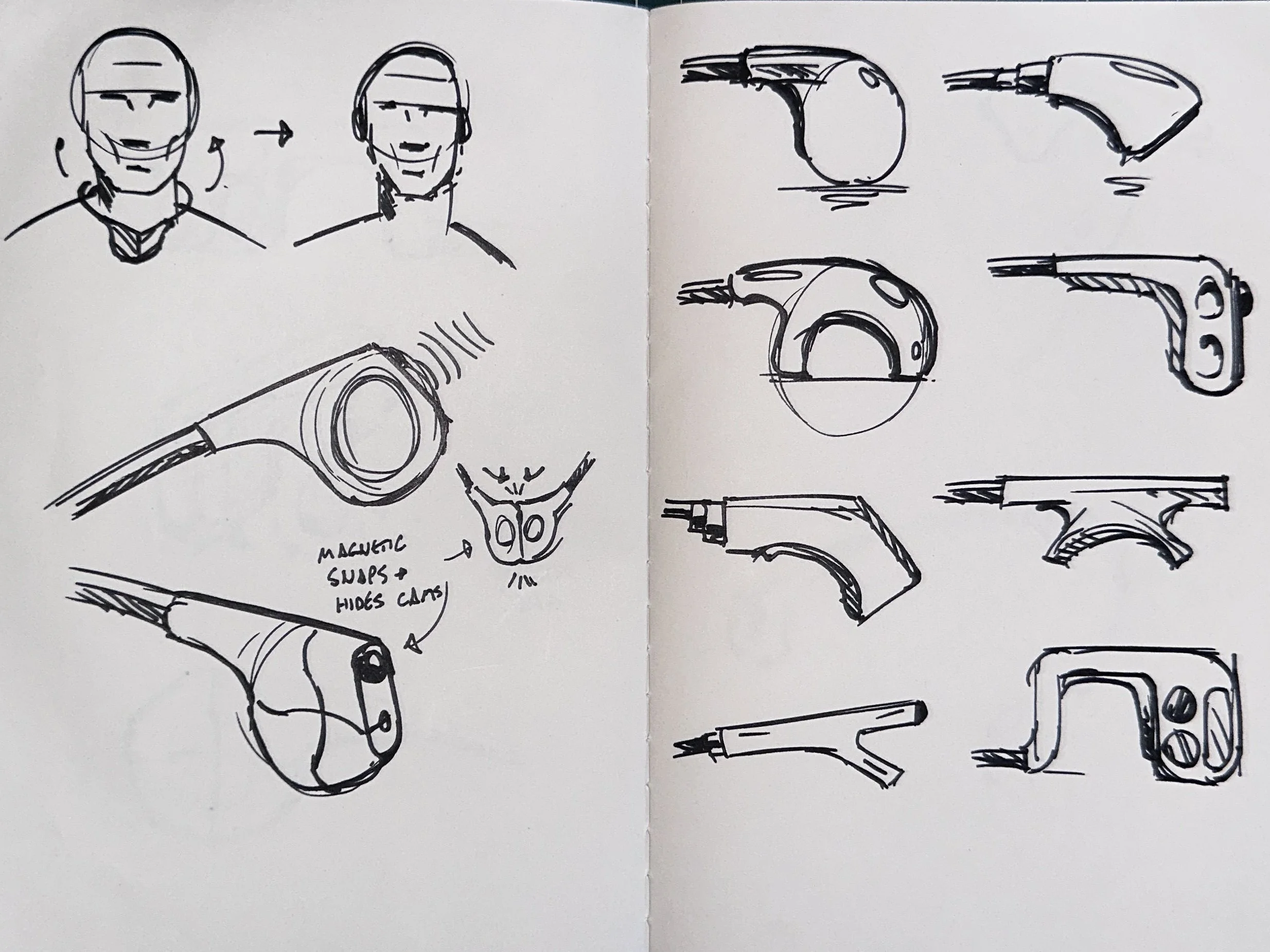

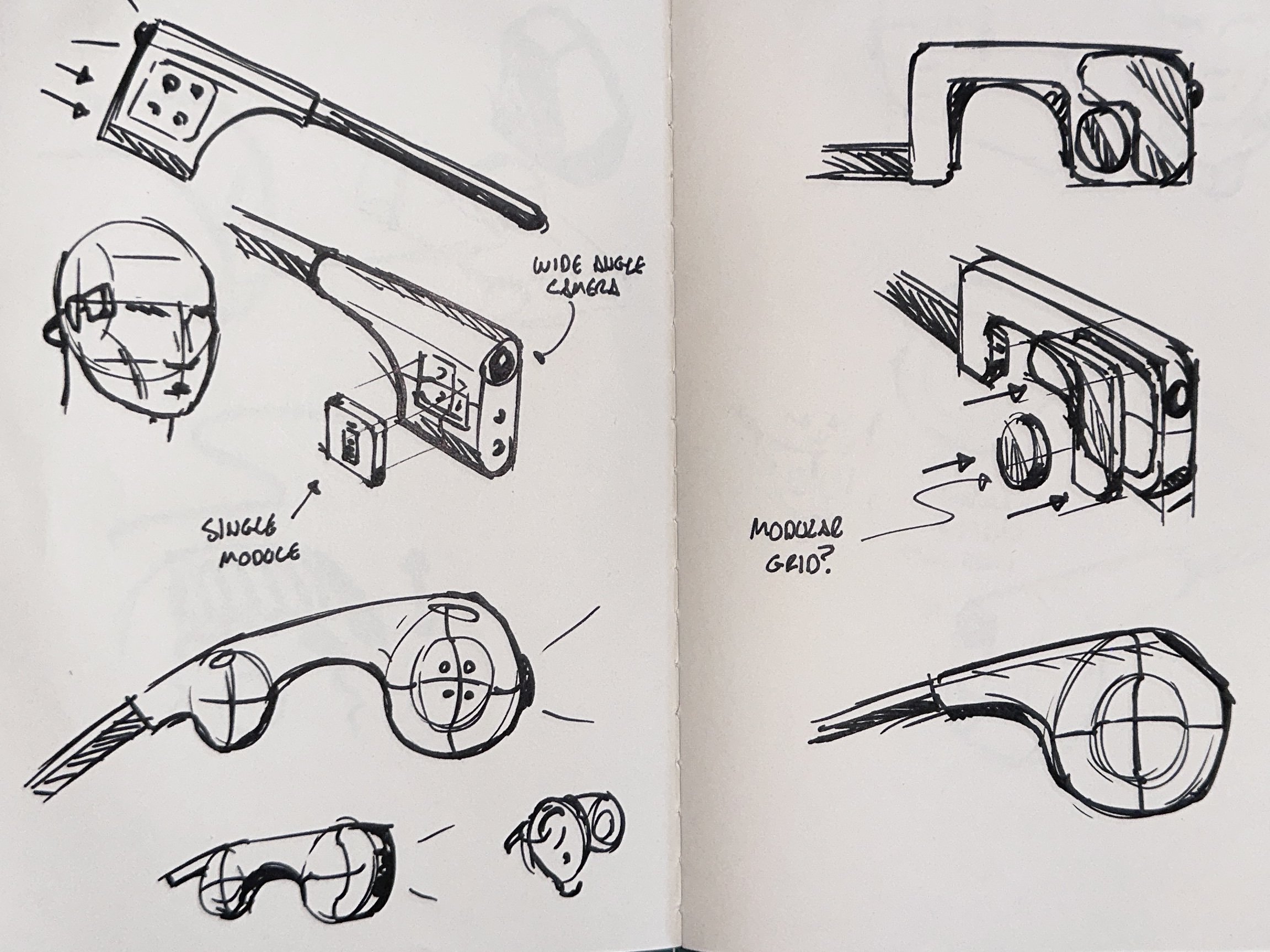

So in the end, the actual design process remained pretty traditional: sketching, 3D prints, feedback from friends, CAD in Fusion, and rendering in KeyShot.

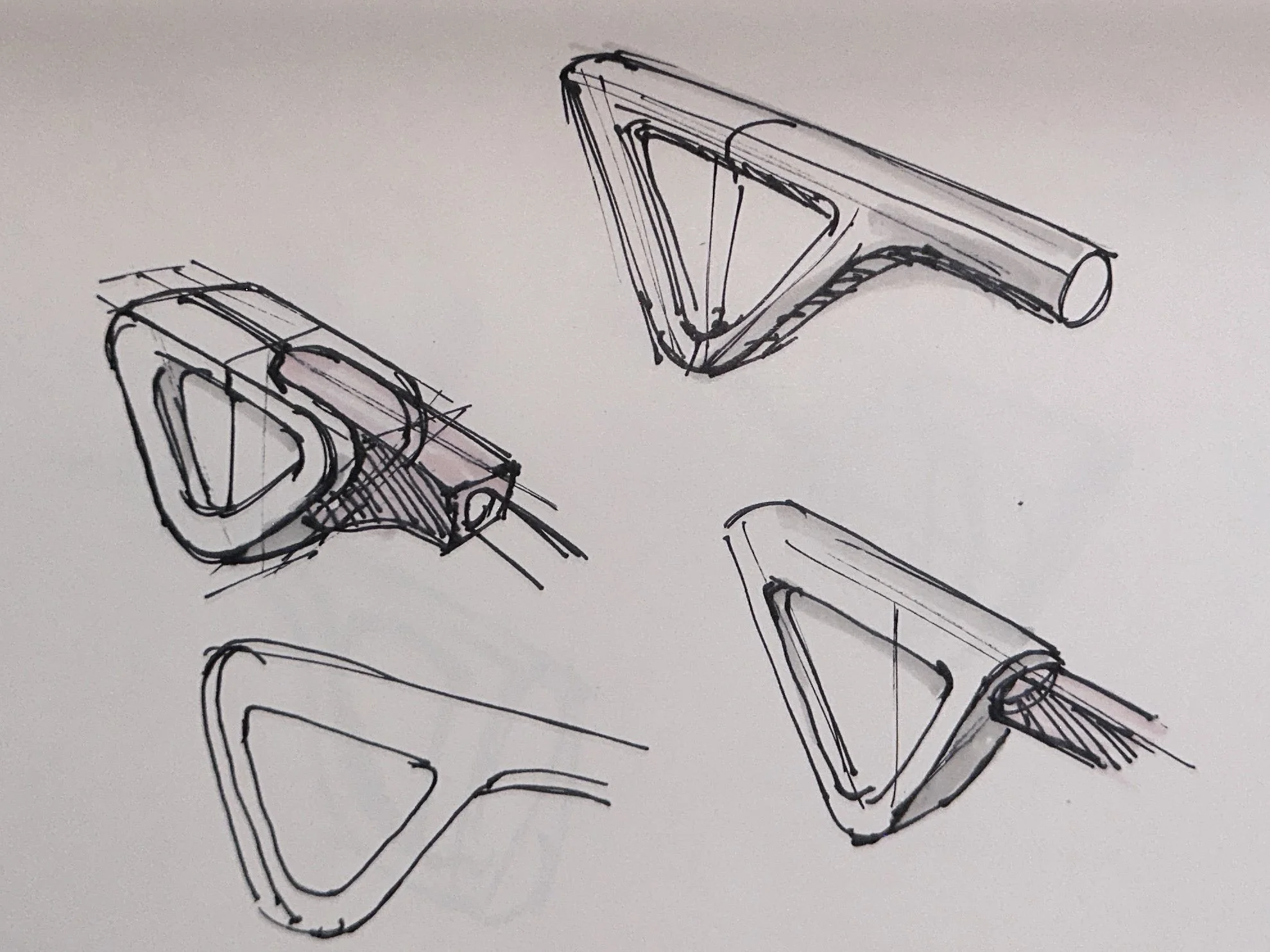

I explored different geometries before settling on the square design, spending some time on a fully rounded form. I moved away from it because the modularity of the square design (the Tetris modules) better fitted the user challenges and the aesthetic I wanted. My initial design had larger fillet radii, but after a chat with designer Jonny Tran I tightened up the fillets for a cleaner, sharper overall look.

The AI tools were great in parts, especially as an aid to hero shots and use-case visualisation, and I’m sure they will improve over time, but the roulette wheel of GenAI is still too hit or miss for me to rely on regularly.

Going ’round the bend.

Version 1 needed a little extra finesse.

Project snapshots